1. What is a Confusion Matrix Calculator?

Definition: A confusion matrix is a table that compares a classification model's predictions with the actual labels. This calculator computes performance metrics from the four cells of that table.

Purpose: It helps assess the quality of machine learning models, particularly binary classifiers, by calculating accuracy, precision, recall, F1 score, MCC, and the related error rates.

2. How Does the Calculator Work?

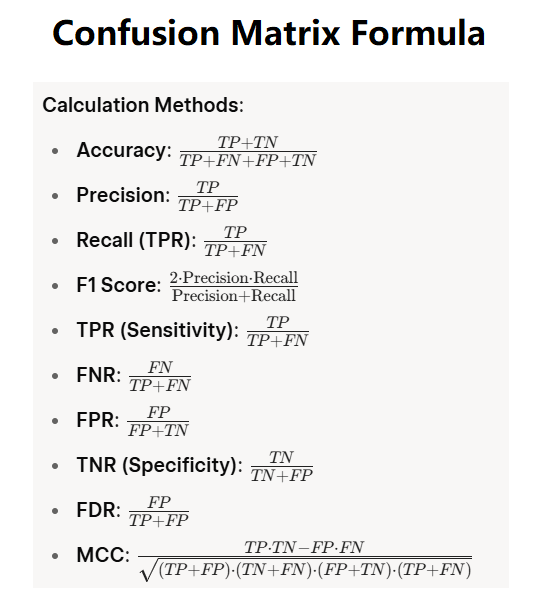

The calculator takes the four values of a binary confusion matrix as input:

- True Positives (TP): Correctly predicted positive cases

- False Negatives (FN): Positive cases incorrectly predicted as negative

- False Positives (FP): Negative cases incorrectly predicted as positive

- True Negatives (TN): Correctly predicted negative cases

Key Metrics Calculated:

- Accuracy: (TP + TN) / (TP + FN + FP + TN)

- Precision: TP / (TP + FP)

- Recall (Sensitivity): TP / (TP + FN)

- F1 Score: 2 * (Precision * Recall) / (Precision + Recall)

- True Positive Rate (TPR): TP / (TP + FN), identical to Recall

- False Negative Rate (FNR): FN / (TP + FN)

- False Positive Rate (FPR): FP / (FP + TN)

- True Negative Rate (TNR, Specificity): TN / (TN + FP)

- False Discovery Rate (FDR): FP / (TP + FP)

- Matthews Correlation Coefficient (MCC): (TP*TN - FP*FN) / sqrt((TP+FP)*(TN+FN)*(FP+TN)*(TP+FN))
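
The formulas above can be sketched in a few lines of Python. This is a minimal illustration, not the calculator's actual implementation: the function name `confusion_metrics`, the dictionary keys, and the choice to return `None` when a denominator is zero are all assumptions made for this example.

```python
import math


def confusion_metrics(tp, fn, fp, tn):
    """Compute the listed metrics from the four confusion-matrix counts."""

    def ratio(num, den):
        # Assumption of this sketch: an undefined ratio is reported as None
        # instead of raising ZeroDivisionError.
        return num / den if den else None

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)

    # F1 is undefined if either input is undefined or both are zero.
    if precision is None or recall is None or precision + recall == 0:
        f1 = None
    else:
        f1 = 2 * (precision * recall) / (precision + recall)

    mcc_denominator = math.sqrt((tp + fp) * (tn + fn) * (fp + tn) * (tp + fn))

    return {
        "accuracy": ratio(tp + tn, tp + fn + fp + tn),
        "precision": precision,
        "recall": recall,  # also the True Positive Rate
        "f1": f1,
        "fnr": ratio(fn, tp + fn),
        "fpr": ratio(fp, fp + tn),
        "tnr": ratio(tn, tn + fp),
        "fdr": ratio(fp, tp + fp),
        "mcc": ratio(tp * tn - fp * fn, mcc_denominator),
    }


metrics = confusion_metrics(tp=80, fn=70, fp=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Returning `None` for an undefined ratio keeps a single empty row or column of the matrix from hiding the metrics that can still be computed.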

3. Importance of Confusion Matrix Calculations

These calculations are essential for:

- Model Evaluation: Providing a comprehensive view of model performance beyond simple accuracy

- Decision Making: Helping choose the right model based on specific needs (e.g., prioritizing precision vs recall)

- Problem Diagnosis: Identifying specific weaknesses in a classification model

4. Using the Calculator

Example: with TP = 80, FN = 70, FP = 20, and TN = 30:

- Accuracy: (80 + 30) / (80 + 70 + 20 + 30) = 110/200 = 0.55 (55%)

- Precision: 80 / (80 + 20) = 0.8 (80%)

- Recall: 80 / (80 + 70) ≈ 0.533 (53.3%)

- F1 Score: (2 * 0.8 * 0.533) / (0.8 + 0.533) ≈ 0.64 (64%)

- MCC: (80*30 - 20*70)/√(100*100*50*150) ≈ 0.11547

5. Frequently Asked Questions (FAQ)

Q: What if all values are zero?

A: The calculator will show an error, because every formula then has a zero denominator and no meaningful metric can be calculated.
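
One way such a check could look is sketched below. The function name `validate_counts` and the error messages are hypothetical, and the extra rejection of negative counts is an assumption that the FAQ answer does not mention.

```python
def validate_counts(tp, fn, fp, tn):
    """Reject inputs for which no metric can be computed."""
    counts = {"TP": tp, "FN": fn, "FP": fp, "TN": tn}

    # Assumption: counts are tallies, so negative values are invalid.
    for name, value in counts.items():
        if value < 0:
            raise ValueError(f"{name} must be non-negative, got {value}")

    # With all four counts at zero, every denominator is zero.
    if sum(counts.values()) == 0:
        raise ValueError("All counts are zero; no metrics can be calculated")

    return counts


try:
    validate_counts(0, 0, 0, 0)
except ValueError as error:
    print(f"Error: {error}")
```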

Q: Why use multiple metrics instead of just accuracy?

A: Accuracy can be misleading with imbalanced datasets. Other metrics provide a more complete picture.
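
A small illustration with made-up counts (not taken from any real dataset): a model that predicts "negative" for every case on a dataset with 5 positives and 95 negatives scores high on accuracy while finding none of the positives.

```python
# Hypothetical counts: 5 actual positives, 95 actual negatives,
# and a model that always predicts the negative class.
tp, fn, fp, tn = 0, 5, 0, 95

accuracy = (tp + tn) / (tp + fn + fp + tn)
recall = tp / (tp + fn)

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")
# prints: accuracy=0.95, recall=0.00
```

Precision is undefined for these counts because TP + FP = 0, which is another sign that the model never predicts the positive class.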

Q: What's the difference between precision and recall?

A: Precision is the fraction of predicted positives that are actually positive, TP / (TP + FP). Recall is the fraction of actual positives that the model correctly identified, TP / (TP + FN).
